Pioneering the future of volumetric video

- Dr Ollie Feroze

- Jul 23, 2024

- 3 min read

We’re thrilled to have been awarded a share of £1.2 million in funding to take part in MyWorld’s CR&D 2 programme, in partnership with Digital Catapult. We’ll be working with the University of Bristol to pioneer advancements in volumetric video technology and pushing forward capabilities for live performances to be broadcast seamlessly into virtual spaces. Read on as Dr Ollie Feroze, Head of R&D at Condense, delves into the project in more detail.

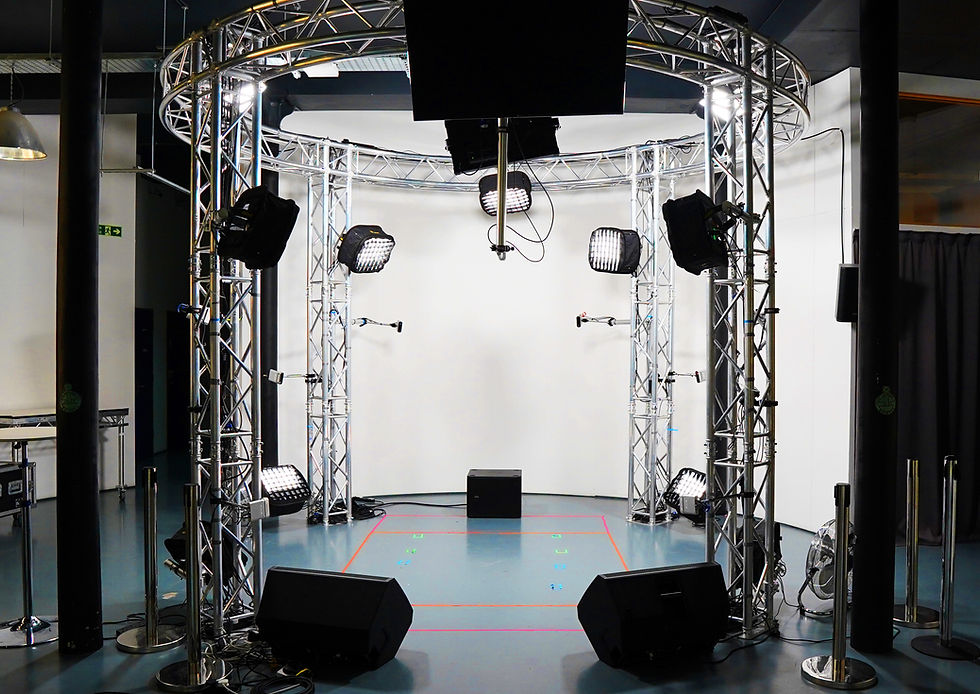

The volumetric capture rig at Condense's studios in Bristol

Embracing Neural Representations

Earlier this month, we started work on our recently awarded CR&D 2 project, Implicit Neural Representation for Volumetric Video Workflows.

At Condense, we believe neural representations to be a step-change in the fields of computer vision and graphics. Unlike traditional methods that rely on explicit geometric models and high-complexity data formats, neural representations use implicit neural networks to encode 3D models and 2D videos.

This new approach offers significant advantages, including more efficient data representations, enhanced visual quality, superior scalability, and reduced computational complexity.

The goal is to make high-quality volumetric video technology more accessible and scalable, with the potential of transforming the way we deliver immersive content to audiences. We’re really happy to be starting this project to explore how to incorporate this technology into our real-time pipelines. Moreover, we get to team up again with the Visual Information laboratory (VI-Lab) at the University of Bristol to do it!

Here’s what we’ll be exploring over the next 12 months.

Data Acquisition and Dataset Creation

At Condense, we have strong links with research institutions, and we really value the open and collaborative processes that universities work under. As part of this project, we will be building and making available a large dataset that will be open to researchers to train and validate their work.

Our team will develop tools for accessing, distributing, and processing this data, ensuring it meets the project's diversity requirements. We will capture data both in studio settings and remote locations to create a comprehensive multi-view video database.

Moving over to the cloud

One of the benefits of neural representations is their compression efficiency. We plan to leverage this to transfer the computationally intensive fusion process (the dynamic creation of 3D meshes and textures) from local hardware to the cloud.

This involves designing high-bandwidth protocols for rig-to-cloud data streaming and creating a fault-tolerant, scalable pipeline. By leveraging cloud infrastructure, we aim to enhance the scalability, reliability, and cost-efficiency of volumetric video processing.

Multi-Viewpoint Video

This part of the project, led by the VI-Lab, is dedicated to designing and optimising an INR-based representation model for multi-viewpoint video data. The team will focus on refining the model architecture, developing loss functions, and evaluating the model's performance in representing multi-view camera data efficiently. This will enable the efficient off-site transmission of raw image data for later processing.

Free-Viewpoint Volumetric Video

Expanding on the multi-viewpoint model, the VI-Lab aims to create an advanced INR model for free-viewpoint volumetric content. The focus will be on modifying the architecture to support dynamic scenes, designing interpolation networks, and optimising the model offline. This model will improve perceptual quality and data efficiency, crucial for high-quality volumetric video rendering.

Finalising On-Site Hardware Requirements

The final component involves evaluating the performance of our INR models against high-bandwidth protocols and integrating them into our volumetric video delivery system. We will investigate embedding the free-viewpoint INR renderer into our Condense Live platform, ensuring seamless operation and high-quality video delivery. This will culminate in a live event to demonstrate the integrated system.

Watch this space

By leveraging implicit neural representations and cloud-based fusion, we aim to make high-quality, scalable volumetric video technology accessible to media organisations worldwide.

At Condense, our core business is virtual live events, integrating live volumetric video into virtual spaces, with the ultimate aim of bringing together the worlds of live music and social gaming.

As we advance through the various elements of this project, we are excited about the potential to drive us closer to our vision of bringing the real world into games, and to bring live events to audiences anywhere. The University of Bristol will also be publishing academic papers on this collaborative research and publishing the data for other researchers to use.

Stay tuned for more updates on our journey towards revolutionising immersive media.